Powerful, Better, Faster: A roadmap for applying AI models to prevent banking fraud

1. Introduction

Many banks are exploring the use of artificial intelligence to improve their anti-fraud systems. In this paper, we discuss the major challenges that must be overcome to successfully apply AI to prevent fraud and look at how a range of AI techniques can be combined to deliver a highly effective and adaptable solution. Any AI-based system must be capable of combining the best of machines and humans: intelligent automation that continually learns from the experience and insights of its human users. We explain how human expertise can be injected into the AI models to refine their sensitivity and improve performance, and how insights derived from the experience of other banks can be harnessed to create network effects that benefit all users.

The paper also acknowledges the increasing regulation and scrutiny of all AI-based systems and sets out an approach to ensuring AI-based fraud solutions are transparent for their operators. This not only makes certain that the solution conforms with evolving regulatory standards, but also allows human users to understand how and why the AI models arrive at their recommendations.

This is critical because decisions on fraud cases must ultimately be taken by human experts, not computers. The value in applying AI in fraud prevention is to identify the highest-risk transactions and provide key information about them to help members of the fraud team take better decisions more quickly.

The aim is to augment human intelligence, not to replace it with an artificial version. If this is achieved, very large gains in performance and efficiency are possible.

Background

AI and intelligent automation are ubiquitous. When you start typing into Google’s search bar, AI tries to predict what you are looking for. When a song ends on Spotify, AI suggests another you might enjoy. When you contact a utility company, AI powers the chatbot that deals with your request. As AI algorithms improve and the price of computing power continues to fall, AI-based services are spreading into many more areas of business and everyday life.

Some 20 years after the first academic papers were published on AI’s potential to detect fraudulent banking transactions, systems applying this technology are now in use in a growing number of banks. These systems monitor banking transactions in real time, blocking those that are judged likely to be frauds in the milliseconds before funds leave the victim’s account.

Fraud prevention solutions based on AI are gradually superseding earlier versions that try to detect frauds by applying a set of predefined rules to analyze transactions. Systems based on rules engines successfully detect a lot of frauds, but because the number of rules they can apply is limited, they cannot be made sufficiently sensitive to detect the full range of fraudulent behaviors.

They therefore produce large numbers of false alerts that result in a poor banking experience for customers and waste a lot of staff time on needless investigations.

AI-based fraud prevention systems offer an alternative solution. Correctly designed and implemented, these technologies have become significantly better in recent years both at preventing frauds and at recognizing legitimate transactions. As a result, the proportion of false alerts is hugely reduced, delivering a better, safer banking experience for customers and allowing the bank’s internal fraud experts to work much more efficiently.

For example, in recent benchmarking tests with NetGuardians customers, the NetGuardians system reduced the number of payments flagged by 83 percent. This enables NetGuardians customers to reduce the cost of their fraud prevention operations by an average of 77 percent, using their previous budget as the baseline.

2. How AI is applied to banking fraud

It is important to understand that most AI-based solutions do not set out to detect fraudulent transactions. Instead, the goal is to identify transactions that exhibit high-risk characteristics. These will include an extremely high percentage of the total frauds committed, along with a small group of legitimate transactions that also display such features. These legitimate transactions might involve a first-time payment to an account in a foreign country, for example, or a large payment to a new beneficiary.

In simple terms, AI-based fraud solutions work by creating a profile for each bank customer based on the way they have used their account in the past. The system will then assess each new transaction against that model. Any that have anomalous characteristics will be identified and those that pose the highest risks will be flagged for investigation.
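The profile-and-compare idea described above can be illustrated with a minimal sketch. It is not the method of any particular product: the `CustomerProfile` class, the three features, and the z-score limit of 3 are all assumptions chosen for illustration.

```python
from dataclasses import dataclass, field
from statistics import mean, pstdev


@dataclass
class CustomerProfile:
    """Hypothetical summary of how a customer has used their account so far."""
    amounts: list = field(default_factory=list)
    beneficiaries: set = field(default_factory=set)
    countries: set = field(default_factory=set)

    def update(self, tx: dict) -> None:
        """Fold one past transaction into the profile."""
        self.amounts.append(tx["amount"])
        self.beneficiaries.add(tx["beneficiary"])
        self.countries.add(tx["country"])


def anomaly_flags(profile: CustomerProfile, tx: dict, z_limit: float = 3.0) -> list:
    """Return the names of the features of `tx` that deviate from the profile."""
    flags = []
    if len(profile.amounts) >= 2:
        mu, sigma = mean(profile.amounts), pstdev(profile.amounts)
        # Flag amounts far above the customer's usual range (assumed limit).
        if sigma > 0 and (tx["amount"] - mu) / sigma > z_limit:
            flags.append("amount")
    if tx["beneficiary"] not in profile.beneficiaries:
        flags.append("beneficiary")
    if tx["country"] not in profile.countries:
        flags.append("country")
    return flags


# Invented history: small domestic payments to known beneficiaries.
history = [
    {"amount": 120.0, "beneficiary": "CH-LANDLORD", "country": "CH"},
    {"amount": 80.0, "beneficiary": "CH-UTILITY", "country": "CH"},
    {"amount": 100.0, "beneficiary": "CH-LANDLORD", "country": "CH"},
]
profile = CustomerProfile()
for past_tx in history:
    profile.update(past_tx)

new_tx = {"amount": 30000.0, "beneficiary": "ES-NEW-ACCOUNT", "country": "ES"}
flags = anomaly_flags(profile, new_tx)
print(flags)
```

A transaction that triggers several flags at once would be a candidate for review, while a routine payment to a known beneficiary triggers none.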

AI in action: finding frauds vs flagging high-risk transactions

Imagine two bank transactions. In the first, a customer sends €30,000 to their son or daughter, who is attending university in a foreign city and has opened a new account there. This is the first time the customer has paid money into that account – and in this case it is a large sum.

In the second, the customer’s computer is hijacked by malware that sends €30,000 to a mule account at the same bank branch in the same foreign city. Again this is the first time the customer has paid money into that account and again it is a large sum.

The first transaction is genuine, the second is a fraud. But from the bank’s perspective, based on the data about each transaction, they look identical. This illustrates how AI is applied to banking fraud: the aim is not to say which is fraudulent, it is to flag both as high-risk situations and enable bank staff to investigate them before any money is released.

The goals of AI-based fraud solutions, therefore, are to capture the highest possible percentage of frauds within the set of transactions that are flagged for investigation, along with the smallest possible percentage of risky but legitimate transactions.
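These two goals correspond to standard measures: the share of all frauds that end up in the flagged set (recall) and the share of legitimate transactions that are flagged by mistake. The sketch below computes both, together with the share of alerts that are real frauds (precision). All counts are invented for illustration and are not drawn from any benchmark.

```python
def flagged_set_metrics(frauds_flagged: int, frauds_total: int,
                        legit_flagged: int, legit_total: int) -> dict:
    """Summarize how well a flagged set captures frauds and spares legitimate payments."""
    return {
        # Share of all frauds that were flagged (to be maximized).
        "recall": frauds_flagged / frauds_total,
        # Share of legitimate transactions flagged by mistake (to be minimized).
        "false_alert_rate": legit_flagged / legit_total,
        # Share of alerts that turn out to be frauds.
        "precision": frauds_flagged / (frauds_flagged + legit_flagged),
    }


# Hypothetical month: 1,000,000 transactions, 100 of them frauds.
metrics = flagged_set_metrics(
    frauds_flagged=95, frauds_total=100,
    legit_flagged=905, legit_total=999_900,
)
for name, value in metrics.items():
    print(f"{name}: {value:.4%}")
```

With these assumed numbers, 95 percent of frauds are caught while fewer than one in a thousand legitimate payments is held for review.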

3. What does a good AI solution for banking fraud look like?

Applying AI successfully to banking fraud involves training algorithms to identify certain characteristics indicative of high-risk transactions within a data set. This is challenging to do in the banking context because, on the one hand, bank frauds represent only a small minority of transactions. On the other hand, there are many different types of banking fraud and millions of customers, each with their own typical pattern of behavior.

Because bank transaction data sets contain only small numbers of frauds compared to the genuine ones, training the algorithms to work effectively is difficult since it is hard to provide them with a wide enough range of fraud types to become sufficiently sensitive and accurate. This creates a risk that the AI becomes highly proficient at recognizing types of fraud that it has seen before, but is unable to detect other types not encountered in its training. This is known as “over-fitting.”

AI does not offer a single “silver bullet” to overcome these difficulties. Instead, to address the challenges of the small numbers of frauds in bank data sets in comparison to genuine transactions, the wide range of potential fraud types and the variability of bank customers’ behavior, AI-based systems must combine a range of techniques, using the strengths of each to deliver a single fraud detection solution.

Step one: Identify a sub-set of transactions that have unusual features

The first stage of the process requires “unsupervised learning” [1] to detect anomalous transactions. The algorithm is fed with raw banking data, in which the frauds have not been highlighted, and is allowed to analyze it for itself. During this process, the algorithm looks for patterns in the data set and identifies those that do not fit into the logical structure it has developed. It also computes the level of risk associated with these anomalies by examining a large number of parameters such as the time at which the transaction takes place, the location, the beneficiary, the value, and the currency involved. The unsupervised learning process also groups transactions according to their similarities, allowing the algorithm to compare a customer’s behavior with their peer group to gain a more reliable indication of how risky anomalous transactions in fact are.

As a result of this first phase, the system identifies a set of anomalous transactions that will typically represent 5 to 10 percent of the original data set. This group will include frauds but also legitimate transactions that have unusual features, indicating they are higher risk.

A further level of refinement is needed to increase the accuracy of the system and reduce false alerts. The anomalous transactions detected using unsupervised learning are therefore fed into the next phase of the process.
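As one illustration of unlabelled anomaly scoring, the sketch below applies a simple Poisson score to payment frequency and keeps the most surprising 10 percent of cases. It assumes that a customer's daily payment count is roughly Poisson-distributed around their historical average. The customer data, the `surprise` score, and the 10 percent cut-off are hypothetical.

```python
import math


def poisson_tail(k: int, lam: float) -> float:
    """P(X >= k) for X ~ Poisson(lam)."""
    cdf_below_k = sum(math.exp(-lam) * lam**i / math.factorial(i) for i in range(k))
    return max(0.0, 1.0 - cdf_below_k)


def surprise(count: int, lam: float) -> float:
    """Higher values mean today's count is less likely under the usual rate."""
    return -math.log(max(poisson_tail(count, lam), 1e-300))


def most_anomalous(scores: dict, fraction: float) -> list:
    """Keep the top `fraction` of keys, ranked by score."""
    keep = max(1, round(len(scores) * fraction))
    return sorted(scores, key=scores.get, reverse=True)[:keep]


# Invented data: customer -> (average payments per day, payments seen today).
activity = {
    "cust_a": (2.0, 2), "cust_b": (1.0, 9), "cust_c": (5.0, 6),
    "cust_d": (3.0, 1), "cust_e": (0.5, 1), "cust_f": (4.0, 4),
    "cust_g": (2.5, 3), "cust_h": (1.5, 0), "cust_i": (6.0, 8),
    "cust_j": (3.5, 5),
}
scores = {cust: surprise(count, lam) for cust, (lam, count) in activity.items()}
flagged = most_anomalous(scores, fraction=0.10)
print(flagged)
```

No fraud labels are used at any point: the score depends only on how far today's behavior departs from the customer's own history.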

[1] NetGuardians’ unsupervised learning involves a wide range of techniques, from simple statistical analysis to AI approaches including Markov chains, Poisson scoring, peer group analysis, frequency analysis, clustering etc.

CASE STUDY

Social engineering

The fraudster impersonated a customer of a bank in Switzerland and asked the bank employee to arrange a transaction of CHF 1 million. The bank employee was deceived into believing that the fraudster was the customer and validated the transaction.

Solution: Although the case involved a new client with little historical data, NetGuardians blocked the payment because the transaction was unusual at the bank level and the beneficiary account was also unusual.

Step two: Refine the analysis to distinguish frauds from legitimate transactions with high-risk features

CASE STUDY

Account takeover using phishing

A fraudster used phishing to introduce malicious code into the Swiss victim’s computer and acquired their e-banking credentials. The criminal then took over the victim’s account and attempted to make an illicit transfer of CHF 19,990.

Solution: NetGuardians stopped the payment as several factors did not match the customer’s profile, including the size of the transfer, the new beneficiary and bank account, as well as the unfamiliar screen resolution and browser used by the fraudster.

The second stage involves “supervised learning” [2], in which the algorithm is trained using transaction data sets in which the frauds have been labelled, allowing the algorithms to learn to identify transactions that exhibit the highest risks.

This stage of the process is difficult because bank frauds make up such a small percentage of overall transactions. However, by examining only the anomalous transactions resulting from the unsupervised learning phase, the system can work on a data set that has a much better balance between frauds and legitimate transactions. This makes it possible to use supervised learning models to distinguish between them.
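A short worked calculation shows why pre-filtering improves the balance. The fraud counts are hypothetical. The 5 percent share is the lower end of the 5 to 10 percent range for the anomalous set, and the assumption that 95 of 100 frauds survive the filter is for illustration only.

```python
# Hypothetical raw data set.
total_transactions = 1_000_000
total_frauds = 100

# Assumed outcome of the anomaly filter: 5% of transactions kept,
# containing 95 of the 100 frauds.
subset_size = total_transactions * 5 // 100
frauds_in_subset = 95

base_rate = total_frauds / total_transactions
subset_rate = frauds_in_subset / subset_size
enrichment = subset_rate / base_rate

print(f"fraud rate in raw data:        {base_rate:.4%}")
print(f"fraud rate in anomalous set:   {subset_rate:.4%}")
print(f"enrichment factor:             {enrichment:.1f}x")
```

Under these assumptions the supervised model sees frauds about 19 times more often than it would in the raw data, at the cost of the few frauds that the first stage missed.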

Different techniques and models are required to address different types of fraud and to adapt to the various situations encountered in financial institutions. Distinguishing frauds from legitimate payments on credit card transactions is a completely different type of challenge to identifying compromised digital banking sessions.

In this phase, the system uses supervised learning to progressively filter out the legitimate transactions and – ideally – leave only the frauds in the set of payments flagged by the system. These are the transactions that must be blocked in real time so that they can be investigated.

Combining supervised and unsupervised learning techniques creates a well-balanced AI fraud solution for use in banks and, crucially, overcomes the difficulties presented by the low concentration of frauds in raw transaction data sets.

[2] NetGuardians’ supervised learning techniques include Random Forest, XGBoost and sometimes also simpler models such as the Newton method.

Using peer groups to understand large, infrequent purchases

Anomaly detection involves examining features of each transaction such as time, counterparty, location, size and currency. But looking only at single customer transactions in isolation will not provide enough information to prevent unacceptably high levels of false alerts. By including peer-group behavior, false alerts can be reduced. For example, if a bank customer buys a car outright, it will involve making a large payment to a beneficiary they may not have dealt with before. This would tend to indicate a high-risk transaction, yet the customer will not expect their bank to block the payment. By examining the customer’s behavior alongside a large group of their peers, the size of transaction and the beneficiary can trigger associations that indicate that the payment is not as risky as it first appears and does not need to be blocked.
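The car-purchase example can be sketched as a comparison against two reference sets: the customer's own history and the payments of a peer group. The amounts, the `share_at_least` helper, and the 5 percent rarity threshold are assumptions for illustration.

```python
def share_at_least(amount: float, reference: list) -> float:
    """Fraction of reference payments that are at least as large as `amount`."""
    return sum(1 for value in reference if value >= amount) / len(reference)


# Invented data: the customer's usual payments and peers' car-dealer payments.
own_history = [60, 120, 85, 40, 150]
peer_car_payments = [18_000, 22_500, 31_000, 27_000, 15_500, 40_000, 24_000, 19_900]

payment = 25_000
rarity_threshold = 0.05  # assumed: "unusual" means fewer than 5% of payments are this large

unusual_for_customer = share_at_least(payment, own_history) < rarity_threshold
unusual_for_peers = share_at_least(payment, peer_car_payments) < rarity_threshold

# Hold the payment only when it is unusual against both reference sets.
hold_for_review = unusual_for_customer and unusual_for_peers
print(unusual_for_customer, unusual_for_peers, hold_for_review)
```

Here the payment is far outside the customer's own history, but three of the eight peer payments are at least as large, so the peer context argues against blocking it.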

Step three: Reinforce the AI system with the expertise of human fraud specialists

Any system that attempts to operate without harnessing the expertise of the bank’s human fraud experts will fall short. To enable the insights of human fraud specialists to be fed into the AI models and improve their accuracy, the system needs to receive feedback from the human fraud specialists investigating the suspicious transactions it has highlighted. The inclusion of “adaptive feedback” is the third stage of the process.

Adaptive feedback comes into play when the AI-based solution flags a transaction for review by a member of the bank’s fraud team. The system prompts the investigators to provide feedback on every transaction flagged as suspicious, whether it turns out to be a fraud or a false alert. If, after review, it emerges that the alert was a false positive, the system asks bank staff to classify the transaction as high, medium or low risk. On the basis of this feedback, the system is trained to focus on transactions similar to those that the human experts classify as high or medium risk, which will require manual verification, and to avoid flagging low-risk transactions.
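One way to picture how investigator feedback becomes training signal is the mapping below. It is a sketch only: the `Verdict` enum, the `training_label` function, and the 1/0 label encoding are hypothetical names and choices, not a documented interface.

```python
from enum import Enum
from typing import Optional


class Verdict(Enum):
    FRAUD = "fraud"
    FALSE_ALERT = "false_alert"


def training_label(verdict: Verdict, residual_risk: Optional[str] = None) -> int:
    """Map investigator feedback to a label: 1 = keep flagging similar cases, 0 = stop."""
    if verdict is Verdict.FRAUD:
        return 1
    if residual_risk not in {"high", "medium", "low"}:
        raise ValueError("a false alert needs a high, medium or low risk rating")
    # High- and medium-risk false alerts still merit manual verification.
    return 1 if residual_risk in {"high", "medium"} else 0


# Invented feedback on three alerts.
feedback = [
    (Verdict.FRAUD, None),
    (Verdict.FALSE_ALERT, "medium"),
    (Verdict.FALSE_ALERT, "low"),
]
labels = [training_label(verdict, risk) for verdict, risk in feedback]
print(labels)
```

Requiring a risk rating on every false alert means each review produces a usable label rather than a bare dismissal.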

Input from the bank’s fraud team on every alert is extremely valuable in refining the system’s AI models. In addition, the system automatically queries the feedback it receives from the fraud detection team to ensure it is of high quality. For example, a fraud investigator may have learnt from experience that the most important indicators of risk in a transaction are the amount and the fact that the counterparty is a first-time beneficiary. But if they pay close attention only to those features, over time the feedback they provide will lead the algorithm to concentrate excessively on them as well and ignore all other features. This will increase the risk of “over-fitting” explained above and so reduce the system’s accuracy and sensitivity.

To counter this potential problem, the system must analyze and determine the quality of the feedback provided and prompt the investigator to consider all aspects of the transaction under review.

Incorporating this process of “active learning” through adaptive feedback into the AI solution further reduces the rate of false positives generated while minimizing the risk of missing a fraud. It also delivers a system which progressively learns from the feedback that its expert bank users provide, creating a solution which is more effective in tackling fraud than either human or machine on its own.

CASE STUDY

Telephone-based account takeover

A fraudster impersonating a bank employee persuaded a customer to disclose their e-banking login details. The fraudster then took over the account and attempted to transfer £21,000 to an illicit account.

Solution: AI-based risk monitoring software blocked the transaction due to unusual e-banking and transaction characteristics, including the unusual amount, screen resolution, beneficiary bank and account details, e-banking session language, and currency.

CASE STUDY

Authorized push payment fraud

Using impersonation techniques, the fraudster convinced the bank customer to transfer €125,000 to an illicit account in Spain.

Solution: NetGuardians’ AI blocked the transaction because certain variables did not match the customer’s profile, including the date when the transfer was initiated, the destination country, beneficiary account, order type, and currency.

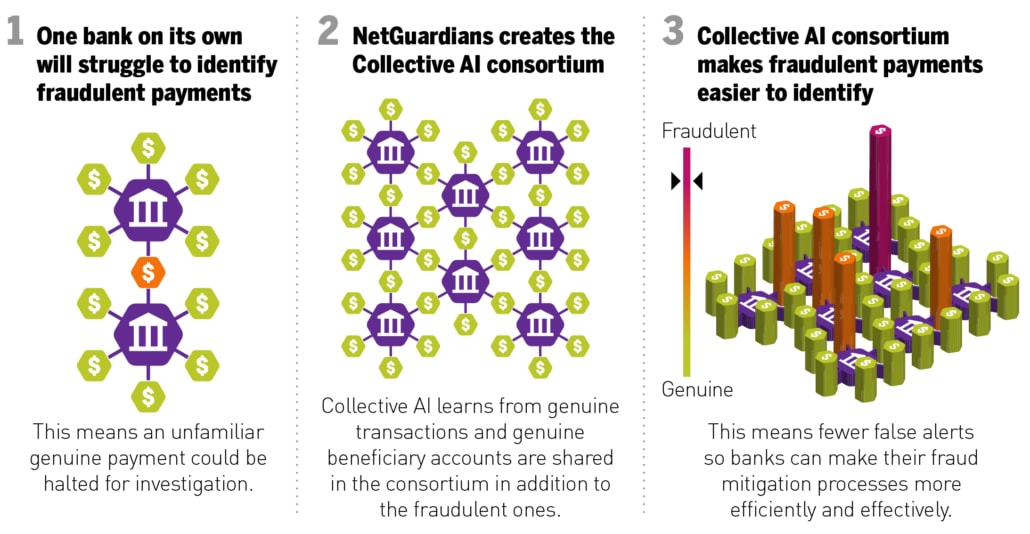

Unlocking network effects through Collective AI

Combining human expertise and machine performance to create a more effective anti-fraud solution is one key challenge in applying AI to banking fraud. Another which is just as important is to enable banks to share information in a secure and compliant way so they can benefit from each other’s experience in detecting and preventing frauds.

Historically, banks have been extremely reluctant to share data due to concerns over competition law, client confidentiality and liability. However, there are ways to overcome these difficulties, using “Collective AI.” NetGuardians has effectively created a consortium of organizations that use its AI-based fraud solution and can therefore take advantage of a network effect – each institution that implements the solution benefits from the insights of all other users.

Collective AI shares statistics on legitimate transactions across the banks that are part of the consortium. Confidentiality is maintained because only statistics on transactions are shared, rather than the raw transaction data itself. These statistics help the AI models deployed within the banks to expand the pool of data they are analyzing, based on the information provided by all members of the consortium.

For example, many banks and bank customers make payments to the same counterparties or beneficiaries. But any analysis or profiling of the recipient done by a bank acting alone will be based only on its own information. Another bank that needs to make a first-time payment to the same beneficiary will have no information of its own to refer to. Understanding whether any of its peers dealt with that beneficiary before and concluded that it is a trusted counterparty or a low fraud risk is extremely valuable. Collective AI enables the second bank to gain that insight and so benefit from the collective experience of its peers without compromising privacy or data security.
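The sketch below illustrates the general idea of sharing statistics rather than raw transactions. It is an assumption-laden toy, not a description of how Collective AI is implemented: the salted hash key, the `local_statistics` and `merge_statistics` helpers, and the account identifiers are all hypothetical.

```python
import hashlib
from collections import Counter

SHARED_SALT = "consortium-demo-salt"  # hypothetical value agreed between members


def beneficiary_key(account: str) -> str:
    """Derive a lookup key so the plain account identifier is not exchanged."""
    return hashlib.sha256((SHARED_SALT + account).encode("utf-8")).hexdigest()


def local_statistics(legit_beneficiaries: list) -> Counter:
    """Count legitimate payments per beneficiary key; no amounts or customers are kept."""
    return Counter(beneficiary_key(account) for account in legit_beneficiaries)


def merge_statistics(*per_bank: Counter) -> Counter:
    """Combine the counts contributed by each member bank."""
    combined = Counter()
    for stats in per_bank:
        combined.update(stats)
    return combined


# Invented data: beneficiaries of legitimate payments seen at two banks.
bank_a = local_statistics(["ES12-GARAGE", "ES12-GARAGE", "CH99-UTILITY"])
bank_b = local_statistics(["ES12-GARAGE"])
shared = merge_statistics(bank_a, bank_b)

# A third bank with no history of its own can query the pooled counts.
known = shared[beneficiary_key("ES12-GARAGE")]
unknown = shared[beneficiary_key("LT00-NEVER-SEEN")]
print(known, unknown)
```

A salted hash alone is not strong anonymization for identifiers that can be enumerated, so a production design would need additional safeguards.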

The results benefit everyone: the performance of the AI models operating in each of the banks that are part of the consortium is improved as insights generated across its members are fed into each separate system.

The confidentiality and security built into this system by sharing statistics rather than raw data is critical, since regulation of AI is becoming increasingly stringent. For example, in April 2021 the European Commission published proposed legislation [3] to govern the use of AI-based systems, including those in use in financial institutions. Banks alongside other organizations will be required to show that their systems conform to the new legislation, establish a risk-management system, provide detailed technical documentation of their system, maintain system logs and report any regulatory breaches.

[3] https://www.finextra.com/blogposting/20431/upcoming-ai-regulations–and-how-to-get-ahead-of-them

Making AI explainable

The European Commission’s new rules will impose transparency requirements on organizations that deploy AI-based systems using statistical and machine learning approaches. The Commission’s Joint Research Centre has called for “explainability-by-design” in AI, highlighting the importance of incorporating explainability into the design of AI systems from the outset, rather than as an afterthought.

Transparency is vital for bank staff as well as the general public: too often AI is treated as a “black box.” But AI-based fraud prevention solutions require a final decision from a human operator on each potential instance of fraud. If bank staff are to trust the technology to help them make those decisions, they must be able to understand why the system has flagged a transaction as suspect – irrespective of whether it is a fraud or a false alert. They must also be able to explain to the customer why a transaction on their account has been blocked. And bank staff are ultimately accountable to customers, to colleagues and to regulators for these decisions. For all these reasons it is critical that they understand why their automated systems have flagged a particular transaction.

It is essential therefore that each risk model used to screen the various features of a transaction has a corresponding dashboard. This will explain visually why a transaction has been blocked, highlighting the features of it that have raised the alarm. In-built explainability like this is critical if machine automation and human expertise are to be successfully combined.

NetGuardians ensures explainability by providing dashboards that typically show a simple statistical representation of the conclusions that the AI-based risk model has reached. Thus the system allows its human users to gain valuable insights into suspicious transactions and their customer context. This helps the bank’s fraud team to gain an understanding of how the risk models behave and why they flag certain transactions for review.
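A very small sketch of the kind of information such a dashboard might surface is given below. It lists each feature of a transaction that falls outside the customer's usual values. The `explain_alert` function, the feature names, and the sample values are hypothetical and do not represent the NetGuardians dashboards.

```python
def explain_alert(usual: dict, tx: dict) -> list:
    """List, in plain language, each feature of `tx` not seen in the customer's history."""
    reasons = []
    for feature, seen_values in usual.items():
        if tx[feature] not in seen_values:
            expected = ", ".join(sorted(seen_values))
            reasons.append(f"{feature}: '{tx[feature]}' not seen before (usual: {expected})")
    return reasons


# Invented profile and e-banking session data.
usual_values = {
    "currency": {"GBP"},
    "session_language": {"en"},
    "screen_resolution": {"1920x1080"},
}
flagged_tx = {
    "currency": "EUR",
    "session_language": "en",
    "screen_resolution": "1366x768",
}

reasons = explain_alert(usual_values, flagged_tx)
for reason in reasons:
    print(reason)
```

Each line gives the investigator a concrete reason that can be checked and, where necessary, explained to the customer.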

Ultimately, AI is only the first line of defense against fraud – the final decision always rests with human fraud specialists. Explainable AI is important to create confidence among human users in the technology they are using. But its relevance goes wider than that. It also has a vital role to play in augmenting the information and insights available to users in taking their decisions. AI cannot and should not replace human intelligence. It is as much about Augmented Intelligence as it is about Artificial Intelligence.

CASE STUDY

Investment fraud

The victim was advised by a fraudster impersonating a business partner to invest in a fictitious company and ordered a payment of $170,000 to an account at a bank in Bulgaria.

Solution: The AI blocked the payment because several variables did not match the victim’s profile, including the unusual destination country, bank, beneficiary account, amount, and currency.

4. The practical challenges for banks in harnessing AI for fraud prevention

AI can deliver high-performance fraud prevention solutions that can significantly reduce banks’ anti-fraud operating costs. But in most cases, these fraud detection solutions require significant in-house resources to enable them to operate.

The largest Tier 1 banks often have the resources to build their own systems and in-house data science capability to capture the benefits of the technology. This process typically involves major implementations and requires dedicated teams to manage and continuously update their systems. The skills required to do this are rare and expensive, and even within the biggest organizations there are competing calls on data scientists’ time, fraud detection being only one among many.

For smaller Tier 2 and 3 banks, hiring enough top-quality data scientists to build an in-house AI capability is extremely difficult and does not usually make economic sense. A plug-and-play AI solution is therefore essential. It must integrate quickly and seamlessly into the bank’s internal systems, be trained on the bank’s data and fully deployed within weeks.

In addition, any plug-and-play solution must be continuously supported by the provider’s engineers and data scientists to ensure its AI models are updated regularly and retrained on the bank’s data to keep up with changes in customers’ banking behavior. All AI models are vulnerable to “data drift.” This risk occurs because the channels people use to carry out their banking change over time – for example as they move from using e-banking to mobile apps – and their spending habits also evolve. These factors create changes in the data they generate which the algorithms must keep up with. The AI systems must therefore be capable of being efficiently retrained periodically to allow for “data drift.”
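One common way to quantify this kind of shift is the Population Stability Index (PSI), which compares the distribution of a feature at training time with its recent distribution. The sketch below applies it to the channel mix. The choice of PSI, the counts, and the 0.25 retraining threshold (a widely used rule of thumb) are assumptions, not something the paper prescribes.

```python
import math


def population_stability_index(expected: dict, actual: dict, eps: float = 1e-6) -> float:
    """PSI between two category-count distributions; 0 means identical shares."""
    expected_total = sum(expected.values())
    actual_total = sum(actual.values())
    psi = 0.0
    for category in set(expected) | set(actual):
        # Floor the shares at eps so empty categories do not break the logarithm.
        e = max(expected.get(category, 0) / expected_total, eps)
        a = max(actual.get(category, 0) / actual_total, eps)
        psi += (a - e) * math.log(a / e)
    return psi


# Invented transaction counts per channel.
training_period = {"e-banking": 700, "mobile": 200, "branch": 100}
recent_period = {"e-banking": 300, "mobile": 600, "branch": 100}

psi = population_stability_index(training_period, recent_period)
needs_retraining = psi > 0.25  # assumed rule-of-thumb threshold
print(f"PSI = {psi:.3f}, retrain: {needs_retraining}")
```

Monitoring a handful of such indices on a schedule gives an objective trigger for retraining rather than relying on a fixed calendar alone.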

Equally, the AI solution must be updated regularly by the supplier to optimize for emerging fraud risks, and to implement additional AI techniques over time. Criminals are constantly changing their approach as consumers alter their behavior and new lines of attack open up. The idea that every Tier 2 or 3 bank can support a specialist data science team to track these new fraud types and develop solutions independently is unrealistic. Small banks cannot match the specialist expertise and focused investment of AI-focused fintech providers.

CASE STUDY

Technical support scam

The fraudster impersonated a Microsoft tech support worker and called the victim. Through social engineering, the perpetrator managed to obtain enough information about the victim’s e-banking credentials to attempt to transfer $7,500 to an illicit account in Lithuania.

Solution: NetGuardians’ AI risk models stopped the transaction because its features did not match the customer’s profile, including the unusual currency, type of transaction, beneficiary account details, and country of destination.

The evolution of anti-fraud AI: fake invoice fraud

Fake invoice fraud is a growing problem for banks and their customers. Criminals will send a fake invoice to a company, with small details of the beneficiary altered, in the hope that the company will inadvertently settle the invoice without noticing changes in the payee’s details, such as the beneficiary account number. Such changes are difficult to detect using existing AI approaches, but simply blocking all payments to new and unknown beneficiary accounts will create huge quantities of false alerts.

A more effective way to address this problem is to use a different branch of AI – Natural Language Processing – to analyze the text of the beneficiary’s address and compare this with the beneficiary account to detect any mismatches between them. This is difficult to do, however, because the address shown on the invoice may be incomplete and contain innocuous errors because it has been written by a human, as well as deliberate errors designed to mislead. The AI must therefore match an incomplete address or one that contains human errors with the correct complete address connected with the account ID to detect anomalies.
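The matching problem can be illustrated with simple string similarity from Python's standard library. This is a deliberately basic stand-in for a Natural Language Processing model, and the addresses, the `normalize` helper, and the 0.8 threshold are hypothetical.

```python
import re
from difflib import SequenceMatcher


def normalize(address: str) -> str:
    """Lower-case the address, drop punctuation and collapse whitespace."""
    cleaned = re.sub(r"[^a-z0-9 ]", " ", address.lower())
    return " ".join(cleaned.split())


def address_similarity(a: str, b: str) -> float:
    """Similarity in [0, 1] between two free-text addresses."""
    return SequenceMatcher(None, normalize(a), normalize(b)).ratio()


# Invented data: the address held for the beneficiary account, and two invoices.
on_file = "Acme Supplies Ltd, 12 Harbour Road, Bristol BS1 4QA"
invoice_with_typos = "ACME Suplies Ltd 12 Harbour Rd Bristol"
invoice_mismatch = "Acme Holdings, 88 Station Street, Leeds LS2 7AB"

MATCH_THRESHOLD = 0.8  # assumed cut-off for treating two addresses as the same party

sim_typos = address_similarity(on_file, invoice_with_typos)
sim_mismatch = address_similarity(on_file, invoice_mismatch)
print(f"typos:    {sim_typos:.2f} match={sim_typos >= MATCH_THRESHOLD}")
print(f"mismatch: {sim_mismatch:.2f} match={sim_mismatch >= MATCH_THRESHOLD}")
```

The incomplete, misspelled address still scores close to the address on file, while the genuinely different one does not. A real system would need to handle abbreviations, transliteration and deliberately deceptive near-matches far more carefully.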

5. Conclusion

CASE STUDY

Privileged user abuse

An IT administrator at a bank in Tanzania took advantage of back-end user privileges to inflate account balances for an accomplice by a total of $22,000. The intention was to withdraw the funds from ATMs and via mobile banking, but the fraud was detected and the money never left the bank.

Solution: The software detected that the privileged user checked the accomplice’s account several times over a period of days and flagged the behavior as suspicious.

AI has great potential to make the detection of banking fraud faster, more effective and – by eliminating increasing numbers of false alerts – much more efficient. However, implementing these systems effectively is challenging, especially for smaller banking institutions that do not have access to large numbers of in-house data scientists and engineers.

Detecting frauds in bank data sets while minimizing false positives is a particularly difficult problem for AI-based systems: the low concentration of frauds in comparison to the genuine transactions in bank data sets provides very little information with which to train AI-based models. At the same time, the number of different fraud types is large and bank customers exhibit a wide range of behaviors that the system must learn to recognize and accommodate. Only the largest and best-resourced organizations will be able to refine and implement these systems internally. For most, a fully supported plug-and-play system developed specifically to address banking fraud will prove the only realistic option.

Ideally, any such system should incorporate secure ways for statistical information on suspect transactions to be shared between the different banks that use the system, so all can benefit from access to a wider pool of information. But these complex AI-based systems must also be fully explainable to their users and the public. Regulation of AI-based applications is becoming stricter, and banks must expect to face increasing scrutiny in future over their use of AI.

NetGuardians’ plug-and-play AI solution was developed specifically to target banking fraud and has been engineered to overcome the major challenges in implementing AI in this context, as described in this paper. The NetGuardians system can be trained and implemented within weeks, after which it delivers highly effective fraud prevention.

Based on studies undertaken with a range of financial institutions, the system delivers on average an 83 percent reduction in blocked payments and cuts the cost of the institution’s fraud prevention operations by an average of 77 percent.

Successfully applying AI-based fraud solutions in banking involves overcoming a range of challenges that many banks will struggle to address alone. But working with the right partners to combine AI models with human expertise can unlock major gains in performance, efficiency, and customer satisfaction.